Webscraping is a great way to collect data. Once you’ve found a page containing data you want to extract, using the Inspect feature allows you to take a look inside the HTML code for the webpage. For the example below, I’ve decided to web scrape from SNEP (Syndicat National de l’Édition Phonographique, or the National Syndicate of Phonographic Publishing) a page that gives certification to albums that have reached different sales milestones in the record industry France. This list features domestic and international albums but only awards based on album sales within France. This organization is the French equivalent of RIAA in the United States. In France, the certifications and associated necessary sales for Gold (“Or”) is 50,000 sales, Platinum (“Platine”) is 100,000. Double Platinum (“Double Platine”) is 200,000, Triple Platinum is 300,000. Diamond (“Diamant’) is 500,000, Double Diamond is 1,000,000, Triple Diamond is 1,500,000.

My code reads one page at a time, reading row by row of the html converted into text. this provides me with information such as the album name, artist name, record/distribution labels, album release day, certification, date the album certification and the number of years and months it took between album release and awarded certification. Unfortunately from this website I do not get dates of earlier certifications. For example, my program might read that an album got certified triple platinum on a given day, but I won’t see what dates that album achieved gold, platinum or double platinum.

BeautifulSoup allows us to parse from the webpage into a readable format. We clean the data and store into counters (e.g. increment certification count) lists and dictionaries. Pandas allows us to create a dataframe (essentially a table) to store the data we’ve pulled.

import requests

import pandas as pd

from bs4 import BeautifulSoup

artists = set()

artist_album = set()

artist_album_description = {}

gold_count = 0

plat_count = 0

double_plat_count = 0

triple_plat_count = 0

diam_count = 0

double_diam_count = 0

triple_diam_count = 0

#range represents which pages are pulled from

#in this example, the first 10 pages will be observed

for i in range(1,10):

#requests and pull data

url = 'https://snepmusique.com/les-certifications/page/' + str(i) +'/?categorie=Albums'

pages = requests.get(url)

soup = BeautifulSoup(pages.text, 'lxml')

#print(soup.find('div', class_= 'primary'))

#name = soup.find_all('a', class_='title')

#print(name)

#soup.find_all(class_= True)

#finds description for an individual album,

# including artist album label

descriptions = soup.find_all('div', class_='description')

dates = soup.find_all('div', class_='block_dates')

#4th on page

blocks = len(descriptions)

print("page", blocks)

for i in range(10):

total_time = 0

description = descriptions[i]

block_dates = dates[i]

block_date = block_dates.text

blo = block_date.split("\n")

#print(blo)

certifs = soup.find_all('div', class_="certif")

#4th on page

certif = certifs[i]

certif = certif.text

print(certif)

if certif == "Or":

gold_count += 1

elif certif == "Platine":

plat_count += 1

elif certif == "Double Platine":

double_plat_count += 1

elif certif == "Triple Platine":

triple_plat_count += 1

elif certif == "Diamant":

diam_count += 1

elif certif == "Double Diamond":

double_diam_count += 1

elif certif == "Triple Diamond":

triple_diam_count += 1

date_de_sortie = ""

date_dobtention = ""

duree = ""

if len(blo) == 4:

date_de_sortie = blo[1][-10:]

date_dobtention = blo[2][-10:]

duree = "< 1 mois"

else:

date_de_sortie = blo[1][-10:]

date_dobtention = blo[2][-10:]

duree = blo[3][17:]

#print("date de sortie : " + date_de_sortie)

#print("date de constat : " + date_dobtention)

#print("duree : " + duree)

description = description.text

listed = description.split("\n")

print("split:", times_list)

times_list = duree.split()

# less than one month

if "mois" in times_list and len(times_list) == 2:

total_time = times_list[0]

elif "<" in times_list:

total_time = str(0)

# at least a year

else:

if "ans" or "an" in times_list:

total_time+=12*int(times_list[0])

if len(times_list) == 4:

total_time += int(times_list[2])

elif "mois" in times_list:

total_time += int(times_list[0])

#months but less than a year

else:

total_time += int(times_list[0])

if "moi" or "mois" in times_list:

total_time+=int(times_list[2])

if "\t" in listed[1]:

listed[1] = listed[1][:-1]

if "\t" in listed[0]:

listed[0] = listed[0][:-1]

listed.append(date_de_sortie)

cert = listed.append(certif + " : " + date_dobtention)

listed.append(duree)

listed.remove("Albums")

listed.remove("")

listed.remove("")

end_time = total_time

listed.append(end_time)

print(listed)

#print(block_date)

#creates

art = listed[1]

art_alb = art + "-" + listed[0]

#adds artist to list of seen artists if not already identified

if listed[1] not in artists:

artists.add(listed[1])

#adds album to list of seen artist-albums if not already identified

if art_alb not in artist_album:

artist_album.add(art_alb)

artist_album_description[art_alb] = listed

#if album already seen, adds date to the description

else:

artist_album_description[art_alb] = artist_album_description[art_alb].append(cert)

#summary stats

#print(art_alb)

#print("\n", artist_album, "\n")

#print("\n", artists, "\n")

#print(artist_album_description)

print("Gold count: ", gold_count)

print("Platinum count: ", plat_count)

print("Double Platinum count: ", double_plat_count)

print("Triple Platinum count: ",triple_plat_count)

print("Diamond count: ", diam_count)

print("Double Diamond count: ", double_diam_count)

print("Triple Diamond count: ", triple_diam_count)

#pages = requests.get(url)

#or < platine < double platine < triple platine < diamant < ...

#gold < platinum < double platinum < triple platinum < diamond < double diamond < triple diamond < ?

#if artalb[key]

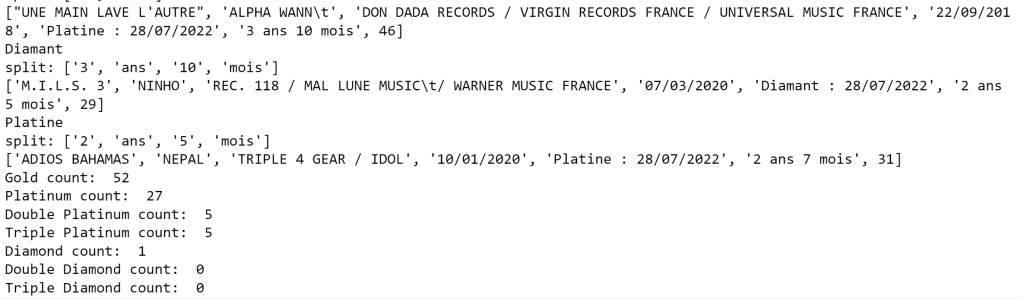

The image below is the final part of the output

In a different post, I will be showing how to use this data in order to make visualizations using pandas, matplotlib and seaborn from the data we have collected in this example.